Tuning Songs Instead of My Guitar

In 1834, Johann Heinrich Scheibler recommended 440 Hz as the standard frequency for A4. For various reasons, a lot of music deviates from that standard. Deviating from 440 Hz has its artistic merits and can give music a different feel and timbre.

It’s an annoyance if you have to retune your instrument slightly for every song you want to play along with.

It is possible to shift the pitch of a piece of music instead, and I have written a pitch shifter called Timestretch Player to do just that. But finding the exact pitch offset is still tedious.

So, fed up with retuning my guitar to play along with a song that was supposedly tuned to match the pitch of an anvil struck with a hammer in its intro, I decided to build myself a little tool to automate the process.

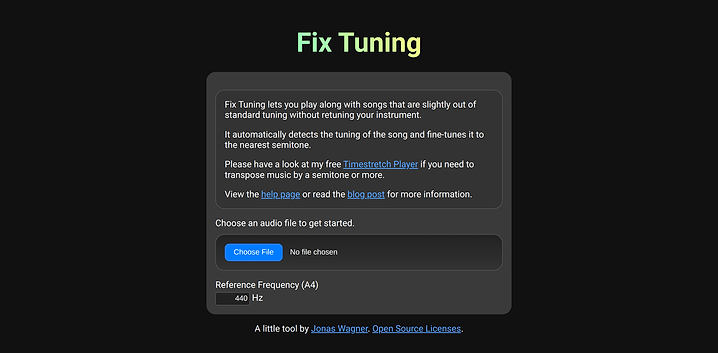

Fix Tuning automatically detects the tuning offset from a reference pitch (usually 440 Hz) in cents (¹⁄₁₀₀ of a semitone) and corrects it by slightly changing the playback speed.
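For reference, a cent is 1/1200 of an octave, so an offset of c cents corresponds to a frequency ratio of 2^(c/1200). The minimal sketch below shows the conversions involved and the playback-speed factor that would cancel a detected offset, assuming the speed change is applied as a plain resampling ratio. The function names are hypothetical and not taken from Fix Tuning.

```rust
/// Frequency ratio for an interval of `cents` (1200 cents per octave).
fn cents_to_ratio(cents: f64) -> f64 {
    2f64.powf(cents / 1200.0)
}

/// Interval from `reference` to `freq` in cents; positive means `freq` is sharp.
fn ratio_to_cents(freq: f64, reference: f64) -> f64 {
    1200.0 * (freq / reference).log2()
}

/// Hypothetical helper: playback-speed factor that cancels a detected offset.
/// Playing audio at `rate` times its normal speed multiplies every frequency
/// by `rate`, so a sharp song needs rate < 1.0 and a flat song rate > 1.0.
fn correction_rate(offset_cents: f64) -> f64 {
    cents_to_ratio(-offset_cents)
}
```

For example, a song tuned to A4 = 432 Hz measures roughly −31.8 cents against 440 Hz, so the correction rate is 440/432 ≈ 1.0185, i.e. playback about 1.85% faster.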

Implementation

The pitch offset detection works by finding the fundamental frequencies of the harmonic signals in the audio file. It then calculates how far each one deviates from the closest note of the 12-tone equal temperament (12-TET) scale. Finally, it calculates the pitch offset that minimizes these deviations.
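The last step is less obvious than it looks, because deviations wrap around: a note 49 cents sharp is only 2 cents away from one that is 49 cents flat, so a plain average can go badly wrong. The sketch below shows one way to handle this and is not necessarily what Fix Tuning does. It maps each deviation to an angle on a 100-cent circle and takes the direction of the weighted mean vector (a circular mean), which maximizes the weighted sum of cos(angle difference), a wrap-aware analogue of minimizing squared error. The `(deviation, weight)` pairs and any weighting scheme (for example by spectral magnitude) are assumptions.

```rust
use std::f64::consts::PI;

/// Deviation in cents of `freq` from the nearest note of a 12-TET scale
/// anchored at `reference` (e.g. 440 Hz for A4). Result lies within ±50.
fn deviation_cents(freq: f64, reference: f64) -> f64 {
    let cents = 1200.0 * (freq / reference).log2();
    // Subtract the nearest whole number of semitones (100 cents each).
    cents - 100.0 * (cents / 100.0).round()
}

/// Estimate the global tuning offset from `(deviation_cents, weight)` pairs
/// using a weighted circular mean on a 100-cent circle.
/// Returns `None` if there is no usable data (no pairs, all weights zero,
/// or the deviations cancel out completely).
fn estimate_offset(deviations: &[(f64, f64)]) -> Option<f64> {
    let (mut sum_cos, mut sum_sin) = (0.0_f64, 0.0_f64);
    for &(dev, weight) in deviations {
        let angle = dev / 100.0 * 2.0 * PI;
        sum_cos += weight * angle.cos();
        sum_sin += weight * angle.sin();
    }
    if sum_cos.hypot(sum_sin) < 1e-12 {
        return None;
    }
    // atan2 yields (-PI, PI], i.e. an offset in (-50, 50] cents.
    Some(sum_sin.atan2(sum_cos) / (2.0 * PI) * 100.0)
}
```

With deviations of +48 and −46 cents, for instance, the arithmetic mean would be +1 cent, while the circular mean correctly lands at −49 cents (equivalently +51).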

The pitch-detection approach is loosely inspired by A. M. Noll (1970), “Pitch determination of human speech by the harmonic product spectrum, the harmonic sum spectrum and a maximum likelihood estimate.”
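To illustrate the harmonic product spectrum idea from Noll's paper: combining the magnitude spectrum with copies of itself downsampled by 2, 3 and so on makes the harmonics of a tone line up at its fundamental. The sketch below takes a magnitude spectrum (computed beforehand with an FFT crate such as rustfft, not shown), sums log magnitudes instead of multiplying raw values to avoid underflow, and refines the peak with parabolic interpolation. The function name, the epsilon and the interpolation step are my own assumptions, not details of Fix Tuning.

```rust
/// Fundamental-frequency estimate via a harmonic product spectrum (HPS).
/// `mag` is a magnitude spectrum, `bin_hz` the width of one bin in Hz
/// (sample_rate / fft_size). For each candidate bin k the magnitudes at
/// k, 2k, ..., harmonics*k are combined in the log domain.
fn hps_fundamental(mag: &[f64], harmonics: usize, bin_hz: f64) -> Option<f64> {
    if mag.is_empty() || harmonics == 0 {
        return None;
    }
    // Highest candidate bin whose top harmonic still lies inside the spectrum.
    let max_k = (mag.len() - 1) / harmonics;
    if max_k < 1 {
        return None;
    }
    // Log-HPS; bin 0 (DC) is never a candidate.
    let log_hps: Vec<f64> = (0..=max_k)
        .map(|k| {
            if k == 0 {
                f64::NEG_INFINITY
            } else {
                (1..=harmonics).map(|h| (mag[k * h] + 1e-12).ln()).sum::<f64>()
            }
        })
        .collect();
    let (best, _) = log_hps
        .iter()
        .enumerate()
        .skip(1)
        .max_by(|a, b| a.1.total_cmp(b.1))?;

    // Parabolic interpolation over the neighboring bins for sub-bin precision.
    let mut k = best as f64;
    if best > 1 && best < max_k {
        let (a, b, c) = (log_hps[best - 1], log_hps[best], log_hps[best + 1]);
        let denom = a - 2.0 * b + c;
        if denom != 0.0 {
            k += 0.5 * (a - c) / denom;
        }
    }
    Some(k * bin_hz)
}
```

In a test spectrum with peaks at bins 10, 20, 30 and 40 where bin 20 is the single loudest bin, HPS with three harmonics still picks bin 10, because bins 20 and 30 are its second and third harmonics.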

Because the pitch changes required to correct the offset are fairly small, I’ve opted to correct them by changing the playback speed rather than using a more advanced pitch-shifting method. Since the offset is measured relative to the nearest note, it never exceeds half a semitone, and correcting that worst case requires a speed change of only about 3%. In my opinion, that is less noticeable than the artifacts introduced by changing the pitch independently of the tempo. For high-quality resampling, I’m using the rubato crate.
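Changing the playback speed amounts to resampling by the speed factor and playing the result at the original sample rate. The hypothetical `change_speed` function below uses plain linear interpolation purely to show the idea. It is not the rubato API, and its quality is noticeably worse (it attenuates high frequencies and does nothing to suppress aliasing), which is exactly why a band-limited resampler like rubato is the better choice.

```rust
/// Resample `input` so that playing the result at the original sample rate
/// sounds `rate` times faster: rate > 1.0 gives a shorter, higher-pitched
/// signal, rate < 1.0 a longer, lower-pitched one.
/// Linear interpolation only; a stand-in for a proper band-limited resampler.
fn change_speed(input: &[f32], rate: f64) -> Vec<f32> {
    assert!(rate > 0.0, "rate must be positive");
    if input.is_empty() {
        return Vec::new();
    }
    let out_len = ((input.len() as f64) / rate).floor() as usize;
    let last = input.len() - 1;
    (0..out_len)
        .map(|i| {
            // Position of output sample i in the input, in input samples.
            let pos = i as f64 * rate;
            let idx = pos.floor() as usize;
            let frac = (pos - idx as f64) as f32;
            let a = input[idx.min(last)];
            let b = input[(idx + 1).min(last)];
            a + (b - a) * frac
        })
        .collect()
}
```

For the worst-case offset of +50 cents, the rate would be 2^(−50/1200) ≈ 0.9715, so the output is about 2.9% longer than the input, in line with the roughly 3% figure above.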

The DSP code is written in Rust and compiled to WebAssembly, while the web UI is built with plain TypeScript, wired up using Vite and wasm-pack.

Limitations

The pitch detection assumes a 12-tone equal temperament scale and doesn’t account for key or tonal center. It simply tunes the audio to align as closely as possible with a 12-tone scale based on the reference frequency.
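To put a number on this limitation: the deviation measured for a frequency f, relative to a 12-TET scale built on a reference note f_ref (e.g. A4 = 440 Hz), can be written as below. For a just (pure) major third, with a 5:4 frequency ratio to a correctly tuned root, it comes out at about −13.7 cents, so music that favors just intervals could pull the estimate slightly flat even when the root matches the reference.

```latex
\begin{aligned}
d(f) &= 1200\log_2\frac{f}{f_{\mathrm{ref}}} - 100\,\operatorname{round}\!\left(12\log_2\frac{f}{f_{\mathrm{ref}}}\right) \\
d\!\left(\tfrac{5}{4}f_{\mathrm{ref}}\right) &\approx 386.31 - 100 \cdot \operatorname{round}(3.86) = -13.69\ \text{cents}
\end{aligned}
```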

While the pitch detection is fairly robust due to averaging over the entire song, it will still struggle with music that is mostly atonal.

Most testing has been done with a small number of songs and short synthetic snippets. For the songs, I established the ground truth by ear. That is hopefully good enough for quick validation, but not for fine-tuning.

Lastly, the Web Audio API used to decode the files resamples the decoded audio to the sample rate it uses internally. This isn’t ideal, but I think the effect is negligible in practice.

Why no AI?

Machine learning, or “AI”, would certainly lend itself to this problem. Back in 2018, CREPE already showed that convolutional neural networks are well suited for pitch estimation, and “End-to-End Musical Key Estimation Using a Convolutional Neural Network” shows that estimating the tonal center is possible as well. Estimating the tonal center would be fairly difficult with my current approach, because it involves a lot of cultural and musical conventions that are hard to capture in a simple algorithm.

However, building a suitable dataset for this use case and training and testing different architectures is quite a bit of work. Running inference in a web browser also tends to be non-trivial.

So, given the current scope of the project, I think going with a simple hand-coded algorithm was the right call.

Next Steps

It could be interesting to integrate the pitch offset detection into my Timestretch Player. I’m also working on another guitar tuner, where a “Tune to Song” feature could be nice.

Try Fix Tuning
