Desktop rdiff-backup Script

Updated for Ubuntu 15.10.

I have recently revamped the way I back up my desktop. In this post I document the thoughts that went into this. This is mostly for myself, but you might still find it interesting.

To encrypt or not to encrypt

I do daily incremental backups of my desktop to an external hard drive. This drive is unencrypted.

Encrypting your backups has obvious benefits - it protects your data from falling into the wrong hands. But at the same time it also makes your backups much more fragile. A single corrupted bit can spell disaster for anything from a single block to your entire backup history. You also need to find a safe place to store a strong key - no easy task.

Most of my data I'd rather have stolen than lost. A lot of it is open source anyway. :)

The data that I'd rather lose than have fall into the wrong hands (mostly keys) is stored and backed up in encrypted form only. For this I use the gpg agent and ecryptfs.

Encrypting only the sensitive data rather than the whole disk increases the risk of it being leaked. Recovering those leaked keys would, however, require a fairly powerful adversary, who would have other ways of getting their hands on that data anyway, so I consider this strategy a good tradeoff.

As a last line of defense I have an encrypted disk stored away offsite. I manually update it a few times a year to reduce the chance of losing all of my data in case of a break-in, fire or another catastrophic event.

Before showing you the actual backup script I'd like to explain why I'm back to using rdiff-backup for my backups.

Duplicity vs rdiff-backup vs rsync and hardlinks

Duplicity and rdiff-backup are some of the most popular options for doing incremental backups on Linux (ignoring the more enterprisey stuff like Bacula). Another one is rsnapshot, which uses rsync and hardlinks.

The main drawback to using rsync and hardlinks is that it stores a full copy of every file whenever it changes. This can be a good tradeoff, especially when fast random access to historic backups is needed. This combined with snapshots is what I would most likely use for backing up production servers, where getting back some (or all) files of a specific historic version as fast as possible is usually what is needed. For my desktop, however, incremental backups are more of a backup of a backup. Fast access is not needed, but I want to have the history around just in case I get the order of the -iname and -delete arguments to find wrong again without noticing.
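The find slip mentioned above is easy to reproduce safely. The following sketch uses throwaway directories created with mktemp (all directory and file names are made up for the demonstration) and shows that find evaluates its expression from left to right, so a -delete placed before the -iname test is applied to everything rather than only to the matches.

```shell
# Build two identical throwaway trees (names are purely illustrative).
demo_dir=$(mktemp -d)
mkdir "$demo_dir/right" "$demo_dir/wrong"
touch "$demo_dir/right/keep.txt" "$demo_dir/right/junk.TMP"
touch "$demo_dir/wrong/keep.txt" "$demo_dir/wrong/junk.TMP"

# Test first, then act: only files matching *.tmp (case-insensitive) are deleted.
find "$demo_dir/right" -iname '*.tmp' -delete

# Action first: -delete is applied to every entry before -iname is even
# evaluated, so keep.txt is removed as well.
find "$demo_dir/wrong" -delete -iname '*.tmp'

# Only the first tree still contains keep.txt.
ls "$demo_dir/right"
```

Swapping -delete for -print while building the expression is a cheap way to preview what a command would remove.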

Duplicity backs up your data by producing compressed (and optionally encrypted) tars that contain diffs against a full backup. This allows it to work with dumb storage (like S3) and makes encrypted backups relatively easy. However, if even just a few bits get corrupted, any backups after the corruption can be unreadable. This can be somewhat mitigated by doing frequent full backups, but that takes up space and increases the time needed to transfer backups.

rdiff-backup works the other way around. It always stores the most recent version of your data as a full mirror. So you can just cp that one file you need in a pinch. Increments are stored as 'reverse diffs' from the most current version. So if a diff is corrupted only historic data is affected. Corruption to a file will only corrupt that file, which is what I prefer.
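In practice this layout gives two ways of getting a file back. The sketch below wraps both in small functions. The function names, the RDIFF_BACKUP variable and the argument layout are assumptions made for illustration; -r (--restore-as-of) and --list-increments are options of the classic rdiff-backup command line, and newer releases may prefer a different, subcommand-style syntax.

```shell
# Command to run; overridable so the functions can be previewed,
# e.g. with RDIFF_BACKUP=echo.
RDIFF_BACKUP=${RDIFF_BACKUP:-rdiff-backup}

# Latest version: the mirror is a plain directory tree, so cp is enough.
# $1 = backup repository, $2 = path relative to the backed-up root, $3 = target
restore_latest() {
    cp -a "$1/$2" "$3"
}

# Older version: let rdiff-backup apply the reverse diffs back to a given age.
# $1 = age such as 10D or 2W, $2 = repository, $3 = relative path, $4 = target
restore_as_of() {
    "$RDIFF_BACKUP" -r "$1" "$2/$3" "$4"
}

# Show which increments exist in the repository.
# $1 = backup repository
list_increments() {
    "$RDIFF_BACKUP" --list-increments "$1"
}
```

For example, `restore_as_of 10D /media/$USER/backup0/fortress-home Documents/notes.txt /tmp/notes.txt` would fetch a file as it was ten days ago, where Documents/notes.txt is a made-up path.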

The Script

Most backup scripts you find on the net are written for backing up servers or headless machines. For backing up desktop Linux machines the most popular solution seems to be deja-dup, which is a frontend for duplicity.

As I want to use rdiff-backup, I hacked together my own script. Here is roughly what it does:

- Mounts backup device by label via udisks

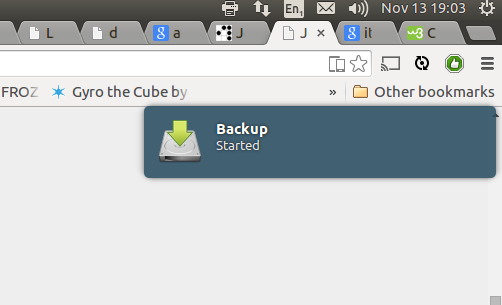

- Communicates start of backup via desktop notifications using notify-send

- Runs backup via rdiff-backup

- Deletes old increments after 8 weeks

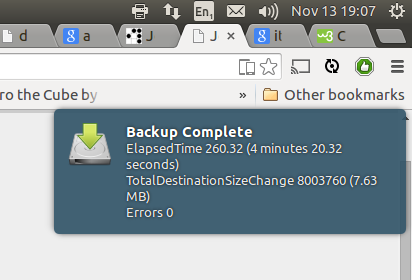

- Communicates errors or success via desktop notifications.

#!/bin/bash

BACKUP_DEV_LABEL="backup0"
BACKUP_DEV="/dev/disk/by-label/$BACKUP_DEV_LABEL"
BACKUP_DEST="/media/$USER/$BACKUP_DEV_LABEL/fortress-home"
BACKUP_LOG="$HOME/.local/tmp/backup.log"
BACKUP_LOG_ERROR="$HOME/.local/tmp/backup.err.log"

# delay backup a bit after the login
sleep 3600

# unmount if already mounted, ensures it's always properly mounted in /media
udisksctl unmount -b "$BACKUP_DEV"

# Mounting disks via udisks, this doesn't require root
udisksctl mount -b "$BACKUP_DEV" 2> "$BACKUP_LOG_ERROR" > "$BACKUP_LOG"

notify-send -i document-save "Backup Started"

# run the backup, appending stdout and stderr to the logs started above
if ! rdiff-backup --print-statistics --exclude /home/jonas/Private --exclude MY_OTHER_EXCLUDES "$HOME" "$BACKUP_DEST" 2>> "$BACKUP_LOG_ERROR" >> "$BACKUP_LOG"; then
    echo "BACKUP FAILED!"
    # notification
    MSG=$(tail -n 5 "$BACKUP_LOG_ERROR")
    notify-send -u critical -i error "Backup Failed" "$MSG"
    # dialog
    notify-send -u critical -t 0 -i error "Backup Failed" "$MSG"
    exit 1
fi

# delete increments older than 8 weeks; rdiff-backup asks for --force
# when more than one increment would be removed in a single run
rdiff-backup --remove-older-than 8W "$BACKUP_DEST"

# unmount with udisksctl again, matching the mount call above
udisksctl unmount -b "$BACKUP_DEV"

# show the key statistics from the log in the final notification
STATS=$(grep '^Errors\|^ElapsedTime\|^TotalDestinationSizeChange' "$BACKUP_LOG")
notify-send -t 1000 -i document-save "Backup Complete" "$STATS"

This script runs whenever I log in. I added it via the Startup Applications settings in Ubuntu.
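For reference, Startup Applications stores its entries as .desktop files under ~/.config/autostart, so the same hook can also be created from a shell. This is only a sketch: the function name, the entry name "Desktop Backup" and the script path in the usage line are placeholders, not details of the setup described here.

```shell
# Write an XDG autostart entry that launches the given script at login.
# $1 = absolute path to the backup script (placeholder in the usage below)
# $2 = autostart directory, defaulting to the per-user XDG location
install_autostart_entry() {
    script_path=$1
    autostart_dir=${2:-${XDG_CONFIG_HOME:-$HOME/.config}/autostart}
    mkdir -p "$autostart_dir"
    # The heredoc body must start at column 0 so the entry has no leading spaces.
    cat > "$autostart_dir/backup.desktop" <<EOF
[Desktop Entry]
Type=Application
Name=Desktop Backup
Exec=$script_path
Terminal=false
EOF
}
```

Calling `install_autostart_entry "$HOME/bin/backup.sh"` (a hypothetical path) would register the script for the current user only.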

The backup ignores the ecryptfs Private folder but does include the encrypted .Private folder, thereby backing up only the ciphertexts of sensitive files.

I like using disk labels for my drives. The disk label can easily be set using e2label:

e2label /dev/sdc backup0

The offsite backup I do by manually mounting the LUKS encrypted disk and running a simple rsync script. I might migrate this to Amazon Glacier at some point.
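The offsite rsync script itself is not shown in this post. As a rough idea of what such a script could look like, here is a minimal sketch. It assumes the LUKS volume has already been unlocked and mounted by hand, and the function name, the RSYNC variable and the mount point in the usage line are hypothetical.

```shell
# Command to run; overridable (e.g. RSYNC=echo) to preview the invocation.
RSYNC=${RSYNC:-rsync}

# Mirror a source directory onto an already mounted offsite disk.
# $1 = source directory, $2 = destination directory on the mounted disk
offsite_sync() {
    # Refuse to run if the destination directory is missing, which hints
    # that the disk is not mounted.
    if [ ! -d "$2" ]; then
        echo "destination $2 not found, is the disk mounted?" >&2
        return 1
    fi
    # -a preserves permissions, times and symlinks; --delete removes files
    # from the copy that no longer exist in the source.
    "$RSYNC" -a --delete "$1/" "$2/"
}
```

A call could look like `offsite_sync "$HOME" /media/$USER/offsite0/home`, where offsite0 stands in for whatever label the offsite disk carries. Because --delete propagates deletions, this copy holds only the latest state and no history.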

I hope this post is useful to someone including future me. ;)